- 5 Posts

- 5 Comments

Joined 29 days ago

Cake day: March 9th, 2025


There is a repo they released.


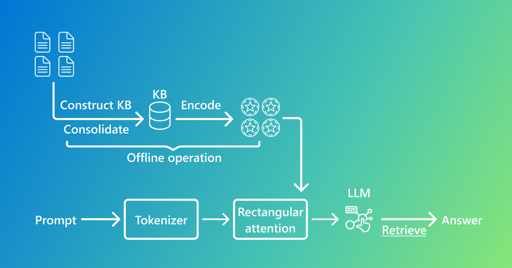

0·20 days ago

I checked out almost all of them from the list, but 1B models are generally unusable for RAG.


0·22 days ago

I use Page Assist with Ollama.


4·27 days ago

Welcome

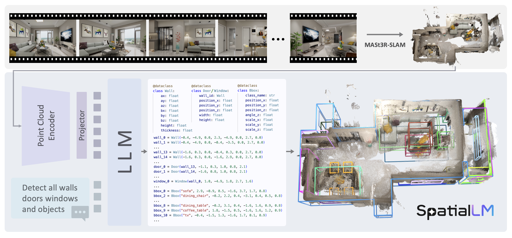

I think the bigger bottleneck is SLAM. Running it is computationally intensive, and it won't run directly on video. SLAM is tough, I guess, and reading the repo doesn't give any indication that it can run with CPU inference.