How does this surprise anyone?

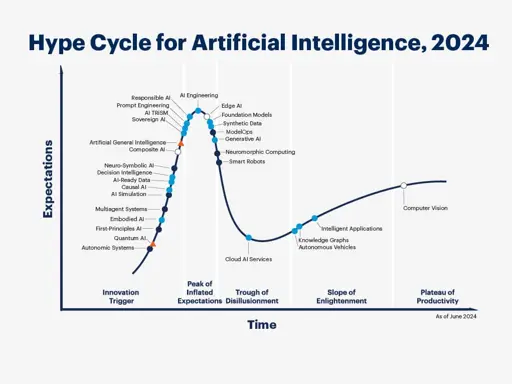

LLMs are just pattern recognition machines. You give them a sequence of words and they tell you which word is statistically most likely to follow, based solely on probability, with no logic or reasoning.
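To make the "most likely next word" idea concrete, here is a toy sketch. It assumes a simple word-count (bigram) model, where `train_bigrams` and `predict_next` are hypothetical names made up for illustration. Real LLMs use neural networks over subword tokens and look at far more context than one previous word, so this only illustrates the principle and is not how any actual product is built.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Toy model: count, for each word, which words follow it in the text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # Pure frequency: no understanding, just "what usually came next".
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # → cat
```

"the" is followed by "cat" twice and by "mat" and "fish" once each, so the toy model picks "cat" purely because it is the most frequent continuation in its data.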

0·27 days ago

I feel like this should be cross-posted to [email protected].

> summarize my client notes for creating statements of work

Huhhhhh… Last time I checked AIs were horrible at summarizing stuff: https://pivot-to-ai.com/2025/02/11/ai-chatbots-are-still-hopelessly-terrible-at-summarizing-news/

Granted, this article is about summarizing news.

There is a community here on Lemmy quite literally named “Fuck AI”. Don’t know if you have heard of it.

Either way, here is the link: [email protected]

11·2 months ago

wtf did I just read?

> veeeeery powerful tool for self-education

You know these are pattern recognition machines, right? And not an actually reliable source of information, right? These are hallucination machines that sometimes get things right simply because such truthful statements are repeated often enough in the data for the machine to pick them up. They can give contradictory answers depending on what keywords you use. As such, they are of little educational value.

If anything, they make self-education harder, as search results will be filled with perfectly search-engine-optimized LLM articles with no quality standards and complete disregard for the truthfulness of the text, poisoning the well of knowledge the internet was supposed to be. And, as a bit of irony, these poor-quality, pattern-based texts will later end up in future machine learning datasets, lowering the quality of that data and causing the machines' own decay.

6·2 months ago

AI just screams “lazy” and “lack of care”. If they don’t care that every article they put out has a completely unnecessary AI image in it, what guarantee do I have they care about any of the content on their website?

If they can’t afford an image, then it’s better to have none than to give money to a company that will DDoS their servers with web scrapers.

In my eyes, AI = Complete disregard for quality control.

4·2 months ago

Yup. These “AI” machines are not much more than glorified pattern recognition software. They are hallucination machines that sometimes get things right by accident.

Comparing them to .tar or .zip files is an interesting way of thinking about how the “training process” is nothing more than adjusting the machine so that it copies the training data (backpropagation). Training works in such a way that the machine’s definition of success is how well it copies the training data:

- If the output is similar to the training data, then it is a success

- If the output is different from the training data, it is a failure
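Here is a minimal sketch of that success/failure signal, assuming a one-parameter model `y ≈ w * x` and a squared-error loss (both chosen purely for illustration; `train` is a hypothetical name). Real networks use backpropagation to compute this same kind of gradient across billions of parameters, and LLMs use a different loss (cross-entropy on the next token), so treat this as a toy version of the idea only.

```python
def train(xs, ys, lr=0.01, steps=1000):
    """Fit y ≈ w * x by plain gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Loss = mean of (w*x - y)^2: it grows the more the output
        # differs from the training data, and is zero when it matches.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Nudge w in the direction that shrinks that difference.
        w -= lr * grad
    return w


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # training data generated by y = 2x
w = train(xs, ys)
print(round(w, 3))  # → 2.0
```

The only thing that ever moves `w` is the gap between the model's output and the training targets; once the output reproduces the data, the gradient is zero and training stops changing anything.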

6·2 months ago

Why am I not surprised? People who know nothing about these things think we just created a brain simulation: they think it’s magic! Meanwhile, those who are tech-savvy know exactly what these things can and can’t do, and just how unreliable they can be.

What do I think? I think it’s normal to have wallpapers that aren’t related to the kernel of your OS, and I’m struggling to make sense of how people setting their wallpaper to something they like could possibly be a problem.

Listen, I know that the term “AI” has been, historically, used to describe so many things to the point of having no meaning, but I think, given the context, it is pretty obvious what AI they are referring to.

> It’s not even generative

It doesn’t need to be generative to be AI.

> It’s a scraper that uses already available information to then “learn”.

That’s just every single “AI” product out there; that’s how they work: they scrape data from all over the internet and create a model that makes predictions based on that data. ChatGPT doesn’t understand anything. It is simply a really complicated model that predicts which word is most likely to follow a given sequence of words. These “AI” aren’t intelligent, nor are they creative. By their very nature, they stay as close as possible to the data they are given and never deviate, as a deviation would mean inaccuracy.

From a historical point of view, the word “AI” has simply meant “cool new technology”. That’s what it has been used to describe, while people think that AI means “artificial person”, like we see in the movies. So we need to be careful when using this word, because it can mean so many things that it ends up having little meaning.

More spyware! Yay! But this time, barely disguised as an AI feature.