I have both, but I just use Pi-hole as a local DNS server/forwarder. I run into too many random cases where sites or redirects don't work properly because they get blocked.

Aka csm10495 on kbin.social

- 2 Posts

- 38 Comments

3·2 months ago

Consider using containers. I used to think this way too, but now my goal is to run almost everything in containers, since it's nice to be able to spin up and tear down just what each 'thing' needs.

I've never had a case where running that fixed anything. I get that it makes sense to verify system integrity, but I'm guessing the OS already does that on its own once in a while.

31·2 months ago

If this is your fear, why not just have a will or something that specifically describes what to do and where to go?

How is Signal considered part of the fediverse?

3·2 months ago

How does a doddle compare to a jiffy?

0·3 months ago

The fact that they changed the name to Azure Linux still upsets me. I get upset easily.

We use it at work. It seems mostly fine and similar enough to the old CentOS and RHEL.

6·3 months ago

Imagine getting insurance to cover that broken window.

That relies on donations, which may or may not come. I understand that in a perfect world that makes sense, but in the real world even those foundations often rely on corporate muscle. Without that enterprise money, I'm not sure how they'd stand.

This is probably a minority opinion, but I think OSS prospers most when there is corporate muscle behind it.

A company that pays engineers to spend time fixing and improving open source software can still be a good company.

Closed source ends up being the worst of all worlds. If there is an issue, you're stuck waiting for someone else to maybe fix it. At least with open source, you can either try to fix it yourself or pay someone else to try.

At the end of the day, I think a lot of the Linux success actually comes down to this.

1·4 months ago

Even with IPFS, I don't understand discoverability. It sort of sounds like it still needs a centralized list mapping metadata to content IDs, etc.

1·4 months ago

Hashed by whom? Who holds the source of truth for the hashes? How would you prevent that from being poisoned? … or are you saying a non-distributed (centralized) hash store?

If centralized: you have the same kind of problem the Internet Archive (IA) has today. If not centralized: how would you prevent poisoning? If enough distributed nodes say different things, the truth can be lost.
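To make the poisoning worry concrete, here is a minimal sketch of a naive decentralized lookup that trusts whatever hash most nodes report for a name. It assumes a simple majority vote (a hypothetical design, not how any particular system resolves names), and the function and hash strings are made up for illustration. It shows the point above: whoever can cheaply run the most nodes decides the "truth".

```python
from collections import Counter


def resolve_by_majority(answers: list[str]) -> str:
    """Hypothetical resolver: return the hash most nodes reported for a name."""
    if not answers:
        raise ValueError("no nodes answered")
    # Counter.most_common breaks ties by first-seen order
    return Counter(answers).most_common(1)[0][0]


# Three honest nodes agree on the real hash for some name.
honest = ["hash-of-real-page"] * 3
print(resolve_by_majority(honest))  # hash-of-real-page

# An attacker spins up four cheap nodes that all vouch for a forged hash.
sybils = ["hash-of-forged-page"] * 4
print(resolve_by_majority(honest + sybils))  # hash-of-forged-page
```

Counting votes is only as trustworthy as the node count is hard to fake, which is why this kind of scheme tends to drift back toward some trusted (more centralized) authority.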

4·4 months ago

In theory this could be true. In practice, the data would be ripe for poisoning. It's like the idea of turning every router into a last-mile CDN with a 20 TB hard drive.

Then you have to think about security and not letting the data change from what was originally given. Idk. I'm sure something is possible, but without a real 'oomph' behind it, nothing big happens.
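For the "not letting the data change" part, content addressing helps: if the ID you request *is* a hash of the bytes, any cache (a router, a peer) can serve the data and the client can check it. A minimal sketch, assuming a plain SHA-256 hex digest as the ID (real IPFS CIDs also wrap a multihash and codec prefix, which this skips); the helper names are hypothetical:

```python
import hashlib


def content_id(data: bytes) -> str:
    # Simplified content ID: plain SHA-256 hex digest, not a real IPFS CID
    return hashlib.sha256(data).hexdigest()


def fetch_verified(expected_id: str, cached: bytes) -> bytes:
    """Accept bytes from an untrusted cache only if they hash to the requested ID."""
    if content_id(cached) != expected_id:
        raise ValueError("cache returned data that does not match the requested ID")
    return cached


original = b"the page as originally published"
cid = content_id(original)

print(fetch_verified(cid, original) == original)  # True
try:
    fetch_verified(cid, b"the page after a router edited it")
except ValueError as err:
    print("rejected:", err)
```

Note that this only protects the bytes once you already know the right ID; it does not answer which ID is correct for a given name, which is the lookup problem from the earlier comments.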

Wait till you accidentally overwrite the system Python.
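A common way to avoid that is to keep project packages out of the system interpreter entirely by installing them into a virtual environment. A minimal sketch using the standard library `venv` module; the helper name `make_project_env` is hypothetical:

```python
import subprocess
import sys
import tempfile
import venv
from pathlib import Path


def make_project_env(env_dir: Path) -> Path:
    """Create an isolated virtual environment and return the path to its interpreter."""
    # Symlink the interpreter on POSIX (as `python -m venv` does there), copy it on Windows.
    # with_pip=False keeps the sketch fast and offline; pass True to bootstrap pip.
    venv.create(env_dir, symlinks=(sys.platform != "win32"), with_pip=False)
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


with tempfile.TemporaryDirectory() as tmp:
    env_python = make_project_env(Path(tmp) / "env")
    # Inside the env, sys.prefix points at the env directory rather than the system
    # install, so packages installed with this interpreter (once pip is bootstrapped)
    # land in the env instead of the system Python.
    prefix = subprocess.run(
        [str(env_python), "-c", "import sys; print(sys.prefix)"],
        capture_output=True, text=True, check=True,
    ).stdout.strip()
    print(prefix)
```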

0·5 months ago

This is true on one hand, but on the other hand, the businesses still using Ubuntu 10.04 with its original kernel would like a word.

1·6 months ago

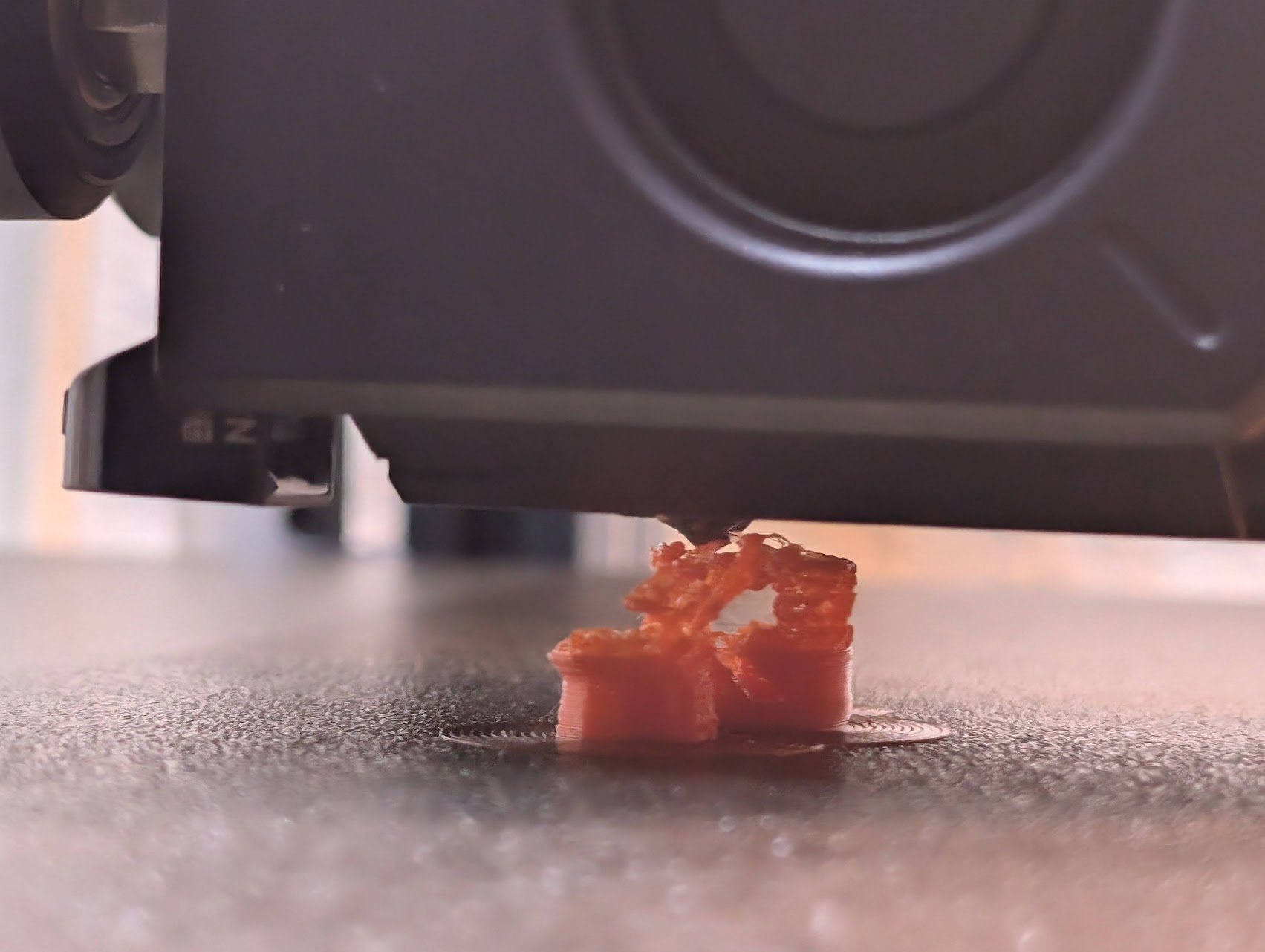

1·6 months agoYep it was in the dryer at 55C after the first print for several hours.

1·6 months ago

Weird as heck, but without any cooling it printed all the way through just fine. Thanks for the advice!

1·6 months ago

Honestly, the timing wasn't very different: it failed at maybe 25 minutes instead of 20.

Trying more than 1 is an interesting idea. Too bad I don't need more than 1 lol. Maybe I'll print a Benchy elsewhere on the plate. Though why wouldn't more cooling help offset it similarly?

5·6 months ago

This is an interesting thought. I wonder if the support tree is creating more seams, which then cause issues. I'll try it tomorrow with a different support structure that changes the seams.

Badeh security advice: use an alternative SSH port. Lots of actors try port 22 and other common alternatives. Far fewer will do a full port scan looking for an SSH server and then try brute-forcing it.
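Why a full port scan still finds a moved SSH server: servers typically greet clients with an identification line starting with `SSH-` (RFC 4253), so a scanner only has to connect to each port and read the first bytes. A minimal sketch of such a banner check; the function names are hypothetical, and it ignores the RFC's allowance for extra lines before the version string. The demo helper stands up a fake listener on localhost rather than touching a real sshd.

```python
import socket
import threading


def looks_like_ssh(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if whatever listens on host:port greets us with an SSH identification line."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            banner = sock.recv(255)
    except OSError:  # refused, timed out, reset, etc.
        return False
    return banner.startswith(b"SSH-")


def find_ssh_ports(host: str, ports: range) -> list[int]:
    """Naive full scan: try every port and keep the ones that answer like SSH."""
    return [p for p in ports if looks_like_ssh(host, p, timeout=0.2)]


def serve_banner_once(banner: bytes) -> int:
    """Demo helper: listen on a free localhost port, send `banner` to the first client."""
    server = socket.socket()
    server.bind(("127.0.0.1", 0))
    server.listen(1)

    def handle() -> None:
        with server:
            conn, _ = server.accept()
            with conn:
                conn.sendall(banner)

    threading.Thread(target=handle, daemon=True).start()
    return server.getsockname()[1]


ssh_port = serve_banner_once(b"SSH-2.0-Demo\r\n")
web_port = serve_banner_once(b"HTTP/1.1 400 Bad Request\r\n\r\n")
print(looks_like_ssh("127.0.0.1", ssh_port))  # True
print(looks_like_ssh("127.0.0.1", web_port))  # False
```

Moving the port (for example with the `Port` directive in `sshd_config`) mainly cuts down on noise from bots that only try 22 and a few common alternatives; it does not hide the service from a scanner like this, so key-only authentication still matters.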