I mean, they have closed caption devices you can borrow. They fit into the cupholder and display captions for you.

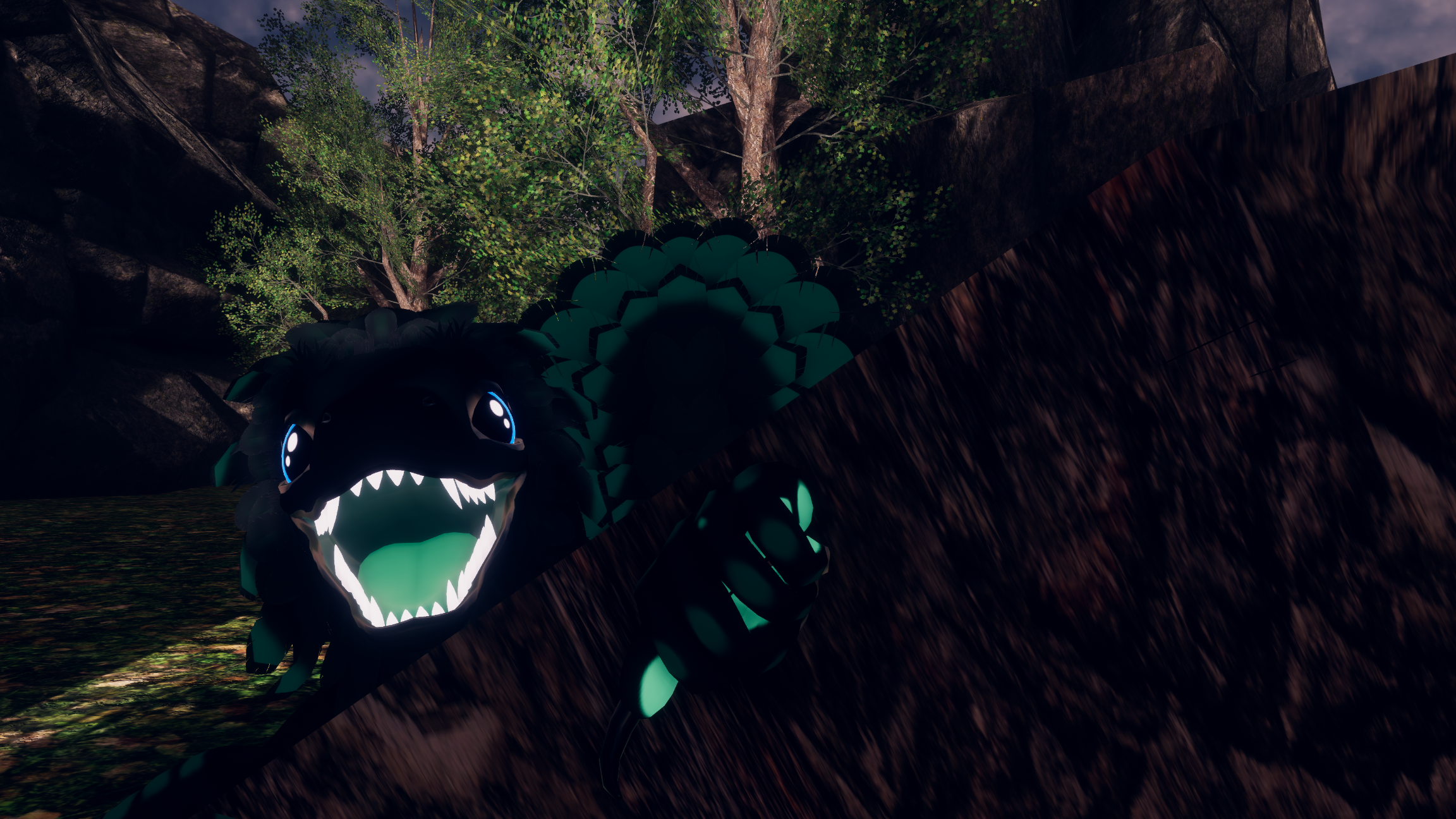

Secretly an opossum.

Eh, I personally think it is, but you have to temper your expectations. It’s absolutely not Artificial General Intelligence, nor is it as flexible or capable of rapid learning as human intelligence (or likely most forms of living intelligence in general). However, I’d challenge the notion that it lacks intelligence entirely.

AI still “learns” from what you shove into it; it’s still creating algorithms to adapt to the information stream(s) it’s being exposed to which is not unlike how the human brain is believed to function. As such, I personally view it to be intelligent, but not anywhere near as intelligent as people think it is, and absolutely not in the way people want it to be.

One of the big differences that I see is that, afaik, AI is unable to learn while it’s running. You have to train it, run it, train it again based on user input, run it, train it again on more user input, and so on. Humans are more efficient at learning when they sleep and take breaks, but are still capable of learning things without “shutting down”, so to speak (not that we ever truly shut down outside of death, but that’s tangential).

Another difference is that, unlike “natural intelligence”, AI ends up being hyper-focused on a specific task. It’s a bit like grabbing an ice cream scoop and removing a very specific part of the brain, let’s say the part responsible for imagining images, and then letting people interface with that alone. Yeah, it’s not gonna be good at parsing text because that’s not what it was designed to do. That’s a different part of the brain. The one you’re playing with right now is only good at visualizing images, so you’re gonna get pretty images, but good luck with getting it to do proper text, understand proper body language, etc.

Finally, AI hallucinates like crazy. This is one where I’m not sure if we’re really that different from AI (I’ll explain in a moment), but it is a big issue when it comes to trying to get AI to factually report information or perform logic tasks. You can ask an AI what 2+2 is and get 4 one day, 5 the next, 3 on Saturdays and then -2027346 on Christmas.

But wait! Doesn’t that make it unintelligent?

No.

Going back to the previous statement about AI being hyper-focused, it just means you’re not interacting with a part of the brain capable of logic; you’re interacting with something else. Maybe the speech center, idk.

However, there’s another element to this where AI doesn’t have a persistent “reality anchor” like we do. To an AI, fact and fiction are purely conceptual because it doesn’t truly exist in our world; it’s off in its own little digital world. Furthermore, the experiences it can gain from the training set are heavily limited compared to what living creatures experience. We have a constant stream of information that reminds us about what is real, who we are, what things look like, how things move and so on; and we get that data stream in 3 dimensions (arguably 2.5, but I digress) instead of 2. It’s like expecting a plant to thrive when given a trickle of water when it normally grows exclusively in a swamp. We ourselves tend to begin hallucinating when our senses become cut off from the outside world because our brains make up stimuli when the expected stimuli are missing. So… I’m not sure if the hallucinations are totally unreasonable, unrealistic or all that different from how we’d behave if subjected to the same environment; but at the very least it’s something that makes AI appear unintelligent.

That’s not to say that AI is a good thing or that it lives up to the hype. Fuck AI for being wildly overhyped, overused, and destroying people’s livelihoods in a world where “earning a living” is still required for some god-forsaken reason (just a reminder that the phrase “earn a living” implies you don’t deserve to live if you aren’t able to make money or have someone doing it for you). At the same time, however, it kinda is intelligent. I think people are just expecting way more from it than it’s capable of doing. It’s like people expect intelligence to manifest in grayscale when it’s more like RGBA or something.

Edit: sorry about the massive wall of text; I was fascinated with AI and its potential for a while, which meant it lived rent-free in my head as a series of philosophical questions about things like intelligence and what it means for humans that something designed to function as a series of virtual neurons would behave so similarly to, yet differently from, humans. These were the kinds of conclusions I came to.

An interesting exception to this trend (so far) is the furry community. We’re too queer, weird, and unapologetically degenerate for most companies to want to have anything to do with us. There’s a reason why you get furries being thirsty for Tony the Tiger, and horniness is only part of the picture. It’s also a shield that keeps the corpos out.

Not when the author proudly flaunts the fact that every dime spent on her works goes towards eradicating trans people. (Yes, that was a bit hyperbolic, but she absolutely has stated that if you’re enjoying Harry Potter then you’re helping her fight against trans people).

To be fair, I honestly enjoy Harry Potter. However, I don’t want to give people the temptation to buy books or go see movies.

No offense intended, but… Attempting to create Harry Potter themed communities while JK Rowling holds the rights to them, on a social media platform with a huge trans/enby population, doesn’t seem like it would go very far.

As much as I enjoy Harry Potter, fuck Rowling and I will be looking forward to the day she dies or sells the IP.

This is already kind of a thing. There used to be a Twitch channel where they had news generated with ChatGPT, presented by AI-voiced characters, but I can’t remember what the channel was called. It may not even exist anymore.

I’m screaming. It’s perfect. I want it so bad, but it’s so expensive. How come rich people get to live in zoo enclosures? How come rich people get to live in natural history museums? Fuck you, I wanna live there. Literally me_irl:

I only see people as an enemy if they’ve declared themselves as such. I’m not gonna make the first move, life is too short to make enemies with everyone I meet. That said, if you’ve got a swastika tattooed on your forehead then I’m gonna take that as a declaration that you’re my enemy.

You forget about furry porn. Furries draw porn because we like it, not because we make money from it (though the money does let some of the people who draw it potentially live off it).

Edit: or to put it another way, someone drew furry porn and discovered they could make money doing it, not the other way around.

Yes, but not that one. I always loved Floop’s castle in Spy Kids. I dunno if there’s a name for that style of architecture, but sculpted interiors and exteriors make me very happy.

Honestly, more people should embrace “theme park houses”. Make life interesting, yanno?

Imo it has less to do with photorealism vs non-photorealism and more to do with PBR (physically based rendering) vs non-PBR. The former attempts to recreate photorealistic graphics by adding additional texture maps (typically metallic/roughness or specular/glossiness) to allow for things ranging from glossiness and reflectivity to refraction and sub-surface scattering. The result is that PBR materials tend to show little to no noticeable difference between PBR-enabled renderers so long as they share the same maps.

Non-PBR renderers, however, tend to be less accurate and tend to have visual quirks or “signatures”. For example, to me everything made in UE3 tends to have a weird plastic-y look to it, while metals in Skyrim tend to look like foam cosplay weapons. These games can significantly benefit from raytracing because it’d involve replacing the non-PBR renderer with a PBR renderer, resulting in a significant upgrade in visual quality by itself. Throw in raytracing and you get beautiful shadows, speculars, reflections, and so on in a game previously incapable of it.
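For a rough idea of what those extra maps actually feed into, here’s a minimal Python sketch, assuming the metallic/roughness workflow. The function names (`lerp`, `pbr_inputs`, `fresnel_schlick`) are made up for illustration, and the 0.04 base reflectance for non-metals is a common convention rather than something any particular engine is guaranteed to use. Roughness would shape the specular highlight itself, which isn’t shown here.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def pbr_inputs(base_color, metallic):
    """Split one texel's base color into diffuse and specular (F0) colors.

    base_color: (r, g, b) tuple with components in [0, 1]
    metallic:   0.0 for a non-metal, 1.0 for a pure metal
    """
    dielectric_f0 = 0.04  # assumed base reflectance for non-metals
    # Metals tint their reflections with the base color; non-metals don't.
    f0 = tuple(lerp(dielectric_f0, c, metallic) for c in base_color)
    # Metals have no diffuse component, so it fades out as metallic rises.
    diffuse = tuple(c * (1.0 - metallic) for c in base_color)
    return diffuse, f0


def fresnel_schlick(cos_theta, f0):
    """Schlick's approximation: reflectance rises toward 1 at grazing angles."""
    k = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)


# Same maps in, same diffuse/specular split out, whichever renderer does the math.
gold_diffuse, gold_f0 = pbr_inputs((1.0, 0.77, 0.34), metallic=1.0)
plastic_diffuse, plastic_f0 = pbr_inputs((0.8, 0.1, 0.1), metallic=0.0)
```

Because the split depends only on the maps, two renderers that follow the same conventions should end up with very similar-looking materials, which is the point I was getting at above.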

Does this run on a Raspberry Pi 1 or 2? I can’t remember which one I have, but I barely use it so it’d be cool to have something to use it for.

Google is still working on improving the Terminal app as well as AVF before shipping this feature. AVF already supports graphics and some input options, but it’s preparing to add support for backing up and restoring snapshots, nested virtualization, and devices with an x86_64 architecture.

This is the part I cared about. Can it run x86_64 programs, or is it just an ARM-compatible version of Debian?

If it can actually run x86_64 programs on ARM devices, then that’s kinda fucking sick and would likely help the world transition to ARM. Like, fuck Google, but this sounds like a good thing, maybe?

Bat Boy? Is that you?

Oh, I think you misunderstand. I’m not sending the audio into audio, I’m sending audio into video. So the signal from the guitar goes into the video connector (there aren’t any built-in speakers). Why? It might look cool.

Do you know what the tolerances are on connectors like VGA, coax, and BNC? My monitor has VGA and BNC, so BNC might be easier to use (fewer intermediate steps, more control due to separate sockets for sync, r/g/b, etc). I’m curious if you might know how high the voltage can go before I run the risk of frying something.

Also, my guitar is an acoustic-electric with a preamp, which would probably make a difference.

I’ll take a look at it. The CRT is a bit sentimental to me (it’s the same model as the one my first PC had, managed to find one on eBay in good condition after like, a year of searching) which is why I’m concerned about blowing it up. However, I might see if any electronics recycling places in my area have a shitty, beat-up CRT TV they’d be willing to part with. That said, I discovered recently that most of the remaining recycling places in my area are run by computer enthusiasts who tend to sell or hold onto anything with any value, like CRTs, so wish me luck.

Kinda genius really. Into old PCs but don’t wanna pay eBay prices for them? Become an electronics recycler and then people will pay you to take their old SGI workstations and Sony BVMs.

All we need to do is build a similar setup and then find a guitar and a CRT and see what happens

Edit: actually, I’ve got a guitar and a CRT and maybe half of the pieces there. The big thing I’m concerned about is destroying the CRT. I have no idea how sensitive CRTs are or how much power is coming from a guitar.

I’m unironically amazed that Texas is supposedly perfectly average while California is below average. I bet it’s all the Texas engineers offsetting the Texas bigots, while the California techbros offset the California tech geniuses.

(I’m saying this as a Texan)

It makes me sad that I was born in a country that doesn’t give a fuck about the environment when I read things like this. I’d love to do projects like this for a living, but… no, wrong country lmao.

Edit: lemme know when a place like Norway takes trans refugees from the US. I’d love to spend my time walking in forests and building birdhouses.