The best part of the fediverse is that anyone can run their own server. The downside of this is that anyone can easily create hordes of fake accounts, as I will now demonstrate.

Fighting fake accounts is hard and most implementations do not currently have an effective way of filtering out fake accounts. I’m sure that the developers will step in if this becomes a bigger problem. Until then, remember that votes are just a number.

The nice thing about the federated universe is that, yes, you can bulk create user accounts on your own instance, but that server can then be defederated by other servers once it becomes obvious that it’s going to create problems.

It’s not a perfect fix and, as this post demonstrated, it’s only really effective after a problem has been identified. But at least for vote manipulation across servers, the software could act on its own: if it detects that, say, 99% of new upvotes on a post are coming from a server created yesterday with 1 post, it could at least flag that for a human to review.
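To make that heuristic concrete, here is a rough Python sketch. It is not how Lemmy or any other implementation actually works; the `Instance` record, the `flag_suspicious_votes` function, and every threshold are made up for illustration, and it assumes the receiving server already knows when it first saw each remote instance and how many posts that instance has made.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Instance:
    """Hypothetical record of what we know about a remote server."""
    domain: str
    first_seen: datetime  # when our server first federated with it
    post_count: int       # posts we have received from it


def flag_suspicious_votes(vote_domains, instances, now,
                          share_threshold=0.99,
                          max_age=timedelta(days=2),
                          max_posts=1):
    """Return domains whose upvotes should go to a human for review.

    vote_domains: one entry per new upvote, naming the voter's home server.
    instances:    dict mapping domain -> Instance.
    All thresholds are illustrative guesses, not tuned values.
    """
    if not vote_domains:
        return []
    counts = Counter(vote_domains)
    total = len(vote_domains)
    flagged = []
    for domain, n in counts.items():
        inst = instances.get(domain)
        if inst is None:
            continue  # unknown server: this sketch takes no position
        is_new = now - inst.first_seen <= max_age
        is_quiet = inst.post_count <= max_posts
        dominates = n / total >= share_threshold
        if is_new and is_quiet and dominates:
            flagged.append(domain)
    return flagged


now = datetime(2023, 7, 1)
instances = {
    "bots.example": Instance("bots.example", now - timedelta(days=1), 1),
    "old.example": Instance("old.example", now - timedelta(days=400), 5000),
}
votes = ["bots.example"] * 199 + ["old.example"]
print(flag_suspicious_votes(votes, instances, now))
```

Note that this only flags, it doesn't block. A brand-new server sending a burst of votes could be legitimate, so the final call stays with a human.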

You can buy 700 votes anonymously on Reddit for really cheap.

I don’t see that it’s a big deal, really. It’s the same as it ever was.

Over a hundred dollars for 700 upvotes O_o

I wouldn’t exactly call that cheap 🤑

On the other hand, ten or twenty quick downvotes on an early answer could swing things I guess …

For the companies who want a huge advantage over others, $100 is nothing in an advertising budget.

I have a small business and I do $1000 a week in advertising.

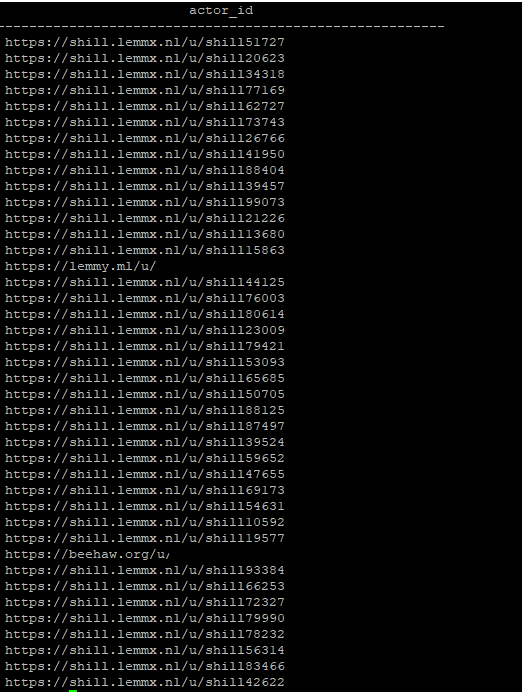

In case anyone’s wondering this is what we instance admins can see in the database. In this case it’s an obvious example, but this can be used to detect patterns of vote manipulation.

Web of trust is the solution. Show me vote totals that only count people I trust at full weight, people they trust at 90%, people those people trust at 81%, etc. (The 0.9 multiplier should be configurable if possible!)
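A minimal sketch of what that could look like, in Python. None of this exists in any fediverse software as far as the thread says; `trust_weights`, `weighted_score`, the `max_hops` cutoff, and the choice to count the viewer's own vote at full weight are all assumptions made for the example.

```python
from collections import deque


def trust_weights(trust, viewer, decay=0.9, max_hops=3):
    """Map each reachable user to a vote weight, as seen by `viewer`.

    trust: dict mapping user -> iterable of users they trust directly.
    People the viewer trusts directly get weight 1.0, the people they
    trust get `decay`, the next ring gets decay**2, and so on.
    Breadth-first search means each user is weighted by their shortest
    trust path. `max_hops` is an assumed cutoff, not from the thread.
    """
    hops = {viewer: 0}
    weights = {viewer: 1.0}  # assumption: my own vote counts fully
    queue = deque([viewer])
    while queue:
        user = queue.popleft()
        if hops[user] == max_hops:
            continue  # don't expand beyond the cutoff
        for friend in trust.get(user, ()):
            if friend not in hops:
                hops[friend] = hops[user] + 1
                weights[friend] = decay ** (hops[friend] - 1)
                queue.append(friend)
    return weights


def weighted_score(votes, weights):
    """Sum votes (+1 or -1 per voter), ignoring anyone outside the web."""
    return sum(value * weights.get(voter, 0.0)
               for voter, value in votes.items())


trust = {"me": ["alice"], "alice": ["bob"], "bob": ["carol"]}
votes = {"alice": 1, "bob": 1, "carol": -1, "sock1": 1, "sock2": 1}
weights = trust_weights(trust, "me")
print(round(weighted_score(votes, weights), 2))
```

The point of the design is that accounts nobody in your web trusts (`sock1`, `sock2` here) contribute nothing, no matter how many of them there are. The obvious cost is that every viewer sees a different total.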

Get rid of votes. They suck.

This was a problem on reddit too. Anyone could create accounts - heck, I had 8 accounts:

one main, one alt, one “professional” (linked publicly on my website), and five for my bots (whose accounts were optimistically created, but were never properly run). I had all 8 accounts signed in on my third-party app and I could easily manipulate votes on the posts I posted.

I feel like this is what happened when you’d see posts with hundreds / thousands of upvotes but had only 20-ish comments.

There needs to be a better way to solve this, but I’m unsure if we truly can solve this. Botnets are a problem across all social media (my undergrad thesis many years ago was detecting botnets on Reddit using Graph Neural Networks).

Fwiw, I have only one Lemmy account.

I see what you mean, but there’s also a large number of lurkers, who will only vote but never comment.

I don’t think it’s unfeasible to have a small number of comments on a highly upvoted post.

You mean to tell me that copying the exact same system that Reddit was using and couldn’t keep bots out of is still vuln to bots? Wild

Until we find a smarter way or at least a different way to rank/filter content, we’re going to be stuck in this same boat.

Who’s to say I don’t create a community of real people who are devoted to manipulating votes? What’s the difference?

The issue at hand is the post ranking system/karma itself. But we’re prolly gonna be focusing on infosec going forward given what just happened